What COPPA Actually Means for Your Kid's AI Use

January 8, 2026

MyDD.ai is deliberate about how we handle all of the data we are entrusted with, especially our children’s personal information. One federal law in particular focuses on protecting the online privacy of children under 13: the Children’s Online Privacy Protection Act (COPPA). We built our product to comply with this law. Here’s what it is and why it matters. We’ll also look at how the major AI chatbot providers approach your child’s privacy.

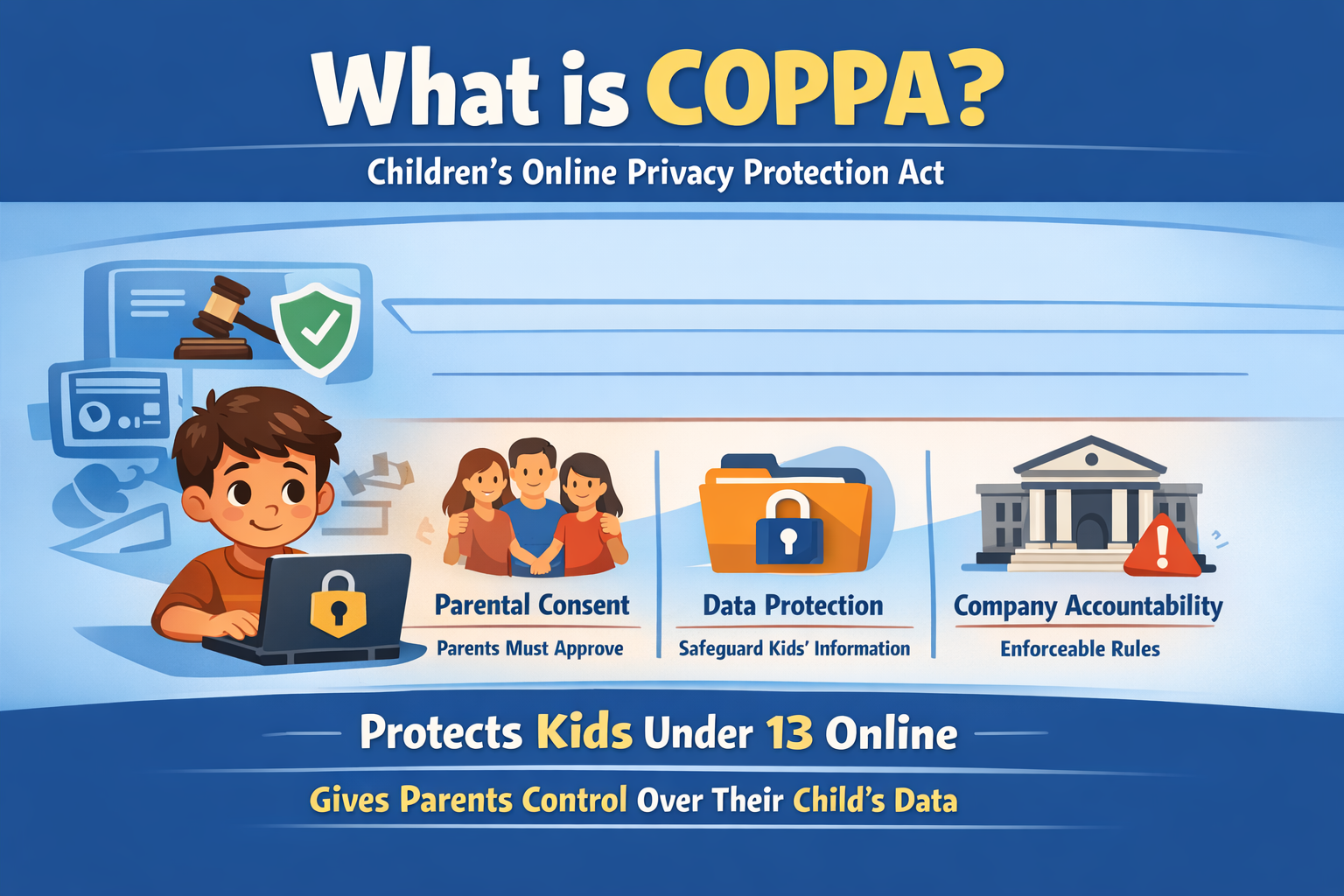

What is COPPA?

COPPA is the federal law that protects children’s privacy online, and the Federal Trade Commission (FTC) enforces it. The law protects children from exploitation online, empowers parents to be gatekeepers of their children’s information, and holds the companies providing these services accountable.

Kids can’t meaningfully consent to data collection because they don’t grasp concepts like data monetization, targeted advertising, or identity theft. They’re also more susceptible to persuasive design tactics. Since children can’t protect themselves, COPPA shifts control to parents, who can make informed decisions about what data collection is appropriate for their family.

Before COPPA, there was little stopping businesses from treating children as easy targets for data harvesting. The law forces companies to treat children’s data with special care or face real consequences.

The broader philosophy is that childhood deserves protection from commercial exploitation. There is something fundamentally wrong with treating a seven-year-old the same way you’d treat an adult consumer. COPPA is the digital equivalent of laws restricting advertising to children or requiring parental consent for medical procedures.

Why should you care?

From a parent’s perspective, COPPA compliance signals something specific: that a company has made deliberate choices to treat your child differently than an adult user.

When a company follows COPPA, it has implemented verifiable parental consent. This means you actually had to do something to authorize your child’s access, rather than the company simply hoping your child checked a box honestly during sign-up. You have the right to review what data has been collected, delete it, and revoke consent at any time. The company also can’t make your child’s participation conditional on collecting more data than the service needs to function.

What are the main labs doing?

Many sites, including the big chatbot providers, prohibit children under 13 from using their platforms in their Terms of Service (ToS). They do this so they do not have to follow the COPPA provisions. Anyone younger than 13 who does use the site is violating the ToS (not that the companies are checking or doing anything to keep those children off their platforms anyway).

OpenAI’s ToS explicitly state: “Minimum age. You must be at least 13 years old or the minimum age required in your country to consent to use the Services. If you are under 18 you must have your parent or legal guardian’s permission to use the Services.”

Anthropic’s ToS go further and do not let anyone under 18 use the product.

Google gets closest to our onboarding with their Family Link accounts. For personal accounts, however, children under 13 are still not allowed. Even with their Family Link product, they do not provide age-appropriate access to their chatbots.

Note: All ToS were accessed and verified in January 2026.

When a company sets its age floor at 13 with self-declaration (like ChatGPT) or at 18 (like Claude), it is essentially treating younger users as outside its responsibility. It’s legal risk mitigation, not child protection.

The practical implication: if your 10-year-old lies about their age and something goes wrong (they’re exposed to inappropriate content, they develop an unhealthy attachment to the AI, or their data gets harvested), you have no recourse. As far as the company is concerned, it followed the law and you didn’t supervise adequately.